Last week, in my post about CI/CD, I brought up Docker. Docker can be used to create an “image”, which is a series of layers built on top of each other. For example, you can start from an image of the Ubuntu operating system. From there, you can define your own image with Python pre-installed as a second layer. When you run this image, you create a “container”. The container is isolated and has everything installed already, so you, or anyone else on your development team, can use the image and know that it has all the necessary dependencies and behaves consistently.
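To make the layer idea concrete, here is a minimal sketch of a Dockerfile for the Ubuntu-plus-Python example. The image tag and the package names are my own assumptions for illustration, not something taken from a particular project.

```dockerfile
# Base layer: the official Ubuntu image (the 22.04 tag is an assumption).
FROM ubuntu:22.04

# Second layer: Python installed on top of the base image.
RUN apt-get update \
    && apt-get install -y --no-install-recommends python3 python3-pip \
    && rm -rf /var/lib/apt/lists/*

# Default command when a container is started from this image.
CMD ["python3", "--version"]
```

Running `docker build -t my-python-image .` in the directory containing this file should build the image, and `docker run --rm my-python-image` should start a container from it. The name `my-python-image` is just a placeholder.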

Of course, real Docker setups get much more complicated, and images tend to have many more layers. In projects that run on various platforms, you will also have images that are built differently for different versions.

So how does this apply to CI/CD? Docker images can be used to run your pipeline, build your software, and run your tests.

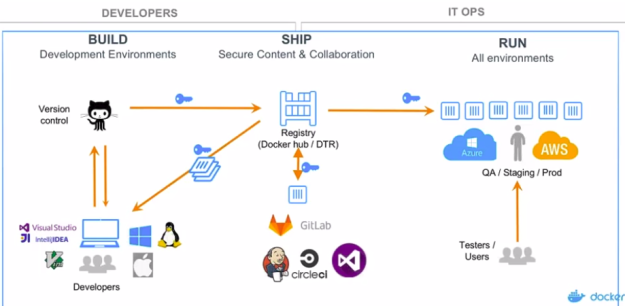

The company released a webinar discussing how CI/CD can be integrated with Docker. They discuss the three-step process of developing with Docker and GitLab: Build, Ship, Run. These are the stages they use in the .gitlab-ci.yml file, but remember that you can define other intermediate stages if needed. The power of CI/CD and Docker is apparent, because “from a developer’s perspective, all you have to do is a ‘git push’, and that’s it”. The developer only needs to write the code and push it to version control; the rest is automated, except for human testers who give feedback on the deployed product. However, good test coverage should prevent most issues, so this manual testing is more about the overall experience.

From Docker Demo Webinar, 4:49

Only five lines of added code in .gitlab-ci.yml are necessary to automate the entire process, although the Dockerfile contains much more detail about which containers to make. The Dockerfile defines the images to be created and the code that needs to be run. In the demo, their latest Ubuntu image is pulled from a server to create a container, in which the code will be run. Then variables are defined, and Git is used to pull the source code from the GitLab repository into this container.

Then, a second container is created from an image with Python pre-installed. This container copies the code from a directory in the first container, described above. Next, the dependencies for Flask are installed automatically, and Flask is run to host the code that was copied from the first container.
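The two containers described above map fairly naturally onto a multi-stage Dockerfile. The following is only a sketch of what such a file could look like, not the file from the demo: the repository URL, the `requirements.txt` file, the `app.py` entry point, and the Python image tag are all hypothetical names I chose for illustration.

```dockerfile
# Stage 1: fetch the source code inside an Ubuntu container.
FROM ubuntu:latest AS source

# Hypothetical repository URL; replace it with your own GitLab project.
ARG REPO_URL=https://gitlab.example.com/group/flask-demo.git

# Install Git (and CA certificates so HTTPS clones work).
RUN apt-get update \
    && apt-get install -y --no-install-recommends git ca-certificates \
    && rm -rf /var/lib/apt/lists/*

# Pull the source code into a directory in this stage.
RUN git clone --depth 1 "$REPO_URL" /src

# Stage 2: run the app from an image with Python pre-installed.
FROM python:3.12-slim

WORKDIR /app

# Copy the code out of the first stage.
COPY --from=source /src /app

# Assumes the repository has a requirements.txt that lists Flask.
RUN pip install --no-cache-dir -r requirements.txt

# Assumes the Flask application lives in app.py.
ENV FLASK_APP=app.py
EXPOSE 5000
CMD ["flask", "run", "--host=0.0.0.0", "--port=5000"]
```

Only the second stage ends up in the final image, so Git and the rest of the Ubuntu layer are left behind. Note that a private repository would need credentials for the clone step, which this sketch does not handle.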

This defines the blueprint for what is to be done when changes are uploaded to GitLab. When the code is pushed, each stage of the pipeline defined in the .gitlab-ci.yml file is run, and once every stage passes, the result is a simple web application already hosted from the Docker image. Everything is done.

In the demo, as should usually be the case in practice, this was done on a development branch. Once the features are complete, they can be merged into the master branch and deployed to actual users. And again, from the developer’s perspective, this is done with a simple ‘git push’.

From the blog CS@Worcester – Inquiries and Queries by ausausdauer and used with permission of the author. All other rights reserved by the author.