Test Driven Development (TDD), like many concepts in Computer Science, is very familiar to even newer programming students, but they lack the vocabulary to formally describe it. In this instance, however, they could probably name it informally: trial-and-error. Yes, very much like the social sciences, computer science academics love giving existing concepts fancy names. If we were to humor them, they would describe it in five-ish steps:

- Add test
- Run tests, check for failures
- Change code to address failures/Add another test
- Run tests again, refactor code
- Repeat
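As a minimal sketch of that loop, assume a hypothetical `is_leap_year` function (not from the coursework) grown test-first with Python's built-in `unittest`. The comments mark which step each piece would correspond to; the function and test names are illustrative only.

```python
import unittest


def is_leap_year(year: int) -> bool:
    """Hypothetical function under test, grown one failing test at a time."""
    # Change code to address failures: each rule below would be added only
    # after the matching test had been written and seen to fail.
    if year % 400 == 0:
        return True
    if year % 100 == 0:
        return False
    return year % 4 == 0


class LeapYearTest(unittest.TestCase):
    # Add test: every method here is written before the code that satisfies it.
    def test_divisible_by_four(self):
        self.assertTrue(is_leap_year(2024))

    def test_ordinary_year(self):
        self.assertFalse(is_leap_year(2023))

    def test_century_is_not_leap(self):
        self.assertFalse(is_leap_year(1900))

    def test_fourth_century_is_leap(self):
        self.assertTrue(is_leap_year(2000))


def run_suite() -> unittest.TestResult:
    """Run tests, check for failures: executes the whole suite and returns the result."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(LeapYearTest)
    return unittest.TextTestRunner(verbosity=0).run(suite)


if __name__ == "__main__":
    result = run_suite()
    print("all passing:", result.wasSuccessful())
```

Deleting the `year % 100` rule and rerunning should make `test_century_is_not_leap` fail, which is the "check for failures" step doing its job.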

The TDD process comes with some assumptions as well. One is that you are not building the system to be tested while writing the tests; these tests are for functionally complete projects. As well, the technique is used to verify that code achieves some valid outcome outlined for it, so a successful test is one that passes, whereas in traditional testing a “successful” test is one that reveals an error.

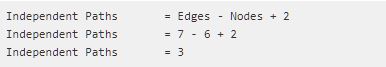

Related as well to our most recent classwork, TDD should achieve complete coverage by testing every single line of code and every branch between those lines, which in the parlance of said classwork would be complete node and edge coverage.
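One caveat is worth a small sketch: executing every line does not always mean every edge has been taken. The hypothetical `apply_discount` function below is an assumption for illustration, with prices kept in whole cents to avoid floating-point surprises.

```python
def apply_discount(price_cents: int, is_member: bool) -> int:
    """Hypothetical function: members get 10% off (integer cents)."""
    if is_member:
        price_cents = price_cents - price_cents // 10
    return price_cents


# This one test executes every line of the function (node coverage) ...
assert apply_discount(100, True) == 90

# ... but the edge that skips the `if` body is only taken by a second test.
assert apply_discount(100, False) == 100
```

With only the first assertion, a line-based coverage report would look complete even though the non-member path was never exercised.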

Additionally, TDD has different levels, two to be precise: Acceptance TDD and Developer TDD. The first, ATDD, involves creating a test to fulfill the specifications of the program and correcting the program as necessary to allow it to pass this test. This testing is also known as Behavior-Driven Development. The latter, DTDD, is usually referred to as just TDD and involves writing tests and then the code to pass them in order to, as mentioned before, test the functionality of all aspects of a program.
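To make the two levels a little more concrete, here is a rough sketch using a hypothetical `Cart` class and an assumed specification line ("a shopper who adds two items sees their combined price"). Neither comes from the coursework; the point is only the difference in what each test is phrased around.

```python
import unittest


class Cart:
    """Hypothetical class used only to contrast the two test levels."""

    def __init__(self) -> None:
        self.items = {}  # item name -> price in cents

    def add(self, name: str, price_cents: int) -> None:
        self.items[name] = price_cents

    def total(self) -> int:
        return sum(self.items.values())


class DeveloperLevelTest(unittest.TestCase):
    # Developer TDD: one small unit, one behavior of a single method.
    def test_empty_cart_totals_zero(self):
        self.assertEqual(Cart().total(), 0)


class AcceptanceLevelTest(unittest.TestCase):
    # Acceptance TDD: phrased around the assumed specification line,
    # "a shopper who adds two items sees their combined price".
    def test_shopper_sees_combined_price(self):
        cart = Cart()
        cart.add("pen", 150)
        cart.add("notebook", 350)
        self.assertEqual(cart.total(), 500)
```

Both classes can be run by pointing `python -m unittest` at the file that holds them; in either case the test would be written first and the `Cart` code filled in until it passes.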

As it relates to our coursework, the second assignment involved writing tests to check functionality against the project specifications. While we modified the given program code very little, if at all, we used the iterative process of writing and rewriting tests to verify the correct functioning of whatever method or feature we were hoping to test. In this way, the concept is very simple, though it remains to be seen whether it stays that way given different code to test.

Sources:

Guru99 – Test-Driven Development

From the blog CS@Worcester – Press Here for Worms by wurmpress and used with permission of the author. All other rights reserved by the author.